Like everyone and their brother, I’ve been playing with ChatGPT and asking it life’s essential questions. It confidently tells me things, and some of those things are incredibly insightful. However, people and chatbots that are wrong often state things confidently.

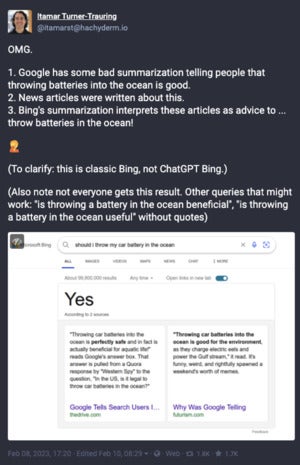

I saw a recent Google Answer posted on Mastodon. In this “answer,” Google suggested that there were benefits to disposing of used car batteries in the ocean, including charging electric eels and powering the Gulf Stream. Bing then picked up this answer and also suggested the environmental benefits of illicit battery disposal. I wonder if Google realizes you can use motor oil to fertilize your lawn. (Author’s note: please don’t throw batteries in the ocean or fertilize your lawn with motor oil.)

Presumably, people searching for this are looking for the joke, but it raises an interesting question. As more people use ChatGPT and publish its results on the web, and as more AI question-answering systems pick up their own generated responses and those of others and train on them, what will happen?

I did what anyone would do for a question they don’t know the answer to. I asked ChatGPT, “What will happen when people publish the output of ChatGPT and it is trained on its own responses?”

The bot replied:

If people publish the output of ChatGPT and use it to train the model further on its own responses, this is known as self-training or self-supervised learning. In this scenario, the model will continue to learn from its own output and potentially improve its performance over time.

However, there are some potential risks to consider when using self-training. One risk is that the model may overfit to its own output, meaning that it becomes too specialized in responding to the types of questions and statements that it has previously encountered. This could lead to the model performing poorly on new or unseen data.

Another risk is that the model may start generating nonsensical or inappropriate responses if it is not properly monitored and supervised during self-training. This could be due to the model picking up on patterns or biases in its own output and amplifying them over time.

Therefore, it is important to use caution when self-training a language model like ChatGPT and to carefully monitor its performance and output to ensure that it continues to generate high-quality responses.

That points to some really scary social consequences, since people seem to believe ChatGPT and think it is magically correct (as well as emotional). What would those consequences look like? I asked, “What could be the social effects of people depending on ChatGPT and it starting to produce nonsense results because it is trained on its own information?”

The robot said:

If people become overly reliant on a language model like ChatGPT and it starts to produce nonsensical results because it is trained on its own information, this could have several negative social effects.

Firstly, people may become frustrated or confused when ChatGPT provides nonsensical or inappropriate responses to their questions or statements. This could lead to a breakdown in communication and a loss of trust in the technology.

Secondly, if people rely on ChatGPT to provide important information or make important decisions, the consequences of the model producing nonsense results could be severe. For example, if a ChatGPT-based chatbot is used to provide medical advice or diagnose health conditions, relying on nonsensical responses could lead to serious health risks.

Thirdly, if ChatGPT is used to generate content for social media or other online platforms, it could lead to the spread of misinformation or fake news. This could have serious social and political consequences, as false information could be used to manipulate public opinion or undermine democratic processes.

In general, it is important to use language models like ChatGPT with caution and to monitor their performance carefully to ensure that they continue to produce accurate and appropriate responses. It is also important to recognize the limitations of these models and not rely on them as a substitute for human intelligence and expertise.

Oh, so we should use technology responsibly. I wonder what ChatGPT thinks we should do about that? I asked, “Given that humans have almost never used a new technology responsibly, what will be the likely outcome of this scenario?”

It replied:

While it is true that humans have often struggled to use new technologies responsibly in the past, it is important to recognize that each new technology is unique and may present its own set of risks and challenges. Therefore, it is difficult to predict with certainty what the outcome of a scenario involving a language model like ChatGPT would be.

That being said, there are steps that can be taken to minimize the potential negative effects of this technology. For example, developers and users of language models like ChatGPT can work to identify and address any biases or ethical concerns that may arise during the development and deployment of the technology. This could include implementing safeguards to prevent the spread of misinformation or inappropriate content, and ensuring that the model is trained on diverse and representative data.

Additionally, governments and regulatory bodies can play a role in ensuring that language models like ChatGPT are used responsibly and that appropriate safeguards are in place to protect the public. This may include regulations around the use of language models in specific industries or applications, and requirements for transparency and accountability in the development and deployment of these technologies.

In summary, while there are certainly risks associated with the use of language models like ChatGPT, it is possible to mitigate these risks through responsible development and deployment, as well as regulatory oversight and public awareness.

Oh wow, ChatGPT thinks it should be regulated? I emailed its creator, OpenAI, to ask whether they agree and, if not, what they know that their language model doesn’t. They have not replied as of this publication.

We’ve long been self-trained to just “Google the answer,” and now we may start training ourselves to “just ask ChatGPT.” However, it is important to remember that this tool is just sucking up some portion of the internet and regurgitating it back to you. ChatGPT can be a decent research tool, but it is garbage in, garbage out.

Like all technology, this is BYOB. Bring your own brain, and don’t feed car batteries to the eels.