In 1960, J.C.R. Licklider, an MIT professor and an early pioneer of artificial intelligence, already envisioned our future world in his seminal article, “Man-Computer Symbiosis”:

In the anticipated symbiotic partnership, men will set the goals, formulate the hypotheses, determine the criteria, and perform the evaluations. Computing machines will do the routinizable work that must be done to prepare the way for insights and decisions in technical and scientific thinking.

In today’s world, such “computing machines” are known as AI assistants. However, developing AI assistants is a complex, time-consuming process that requires deep AI expertise and sophisticated programming skills, not to mention the effort of collecting, cleaning, and annotating the large amounts of data needed to train them. It is thus highly desirable to reuse all or parts of an AI assistant across different applications and domains.

Teaching machines human skills is hard

Training AI assistants is difficult because such AI assistants must possess certain human skills in order to collaborate with and aid humans in meaningful tasks, e.g., determining healthcare treatment or providing career guidance.

AI must learn human language

To realistically help humans, perhaps the foremost skills AI assistants must have are language skills, so that they can interact with their users, interpreting natural language input and responding to requests in natural language. However, teaching machines human language skills is non-trivial for several reasons.

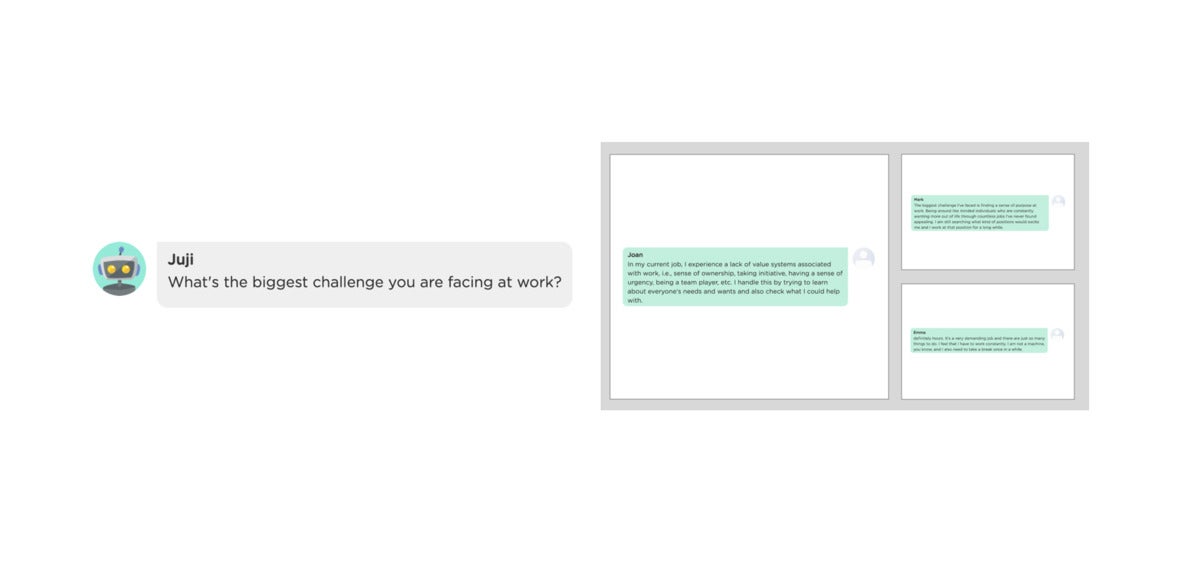

First, human expressions are highly diverse and complex. For example, when an AI assistant (also known as an AI chatbot or AI interviewer) interviews a job candidate with open-ended questions, as shown in Figure 1, the range of possible responses to any one question is almost unbounded.

Juji

Figure 1. An AI assistant asks an open-ended question during a job interview (“What’s the biggest challenge you are facing at work?”). Candidates’ answers are highly diverse and complex, making it very difficult to train AI to recognize and respond to such responses properly.

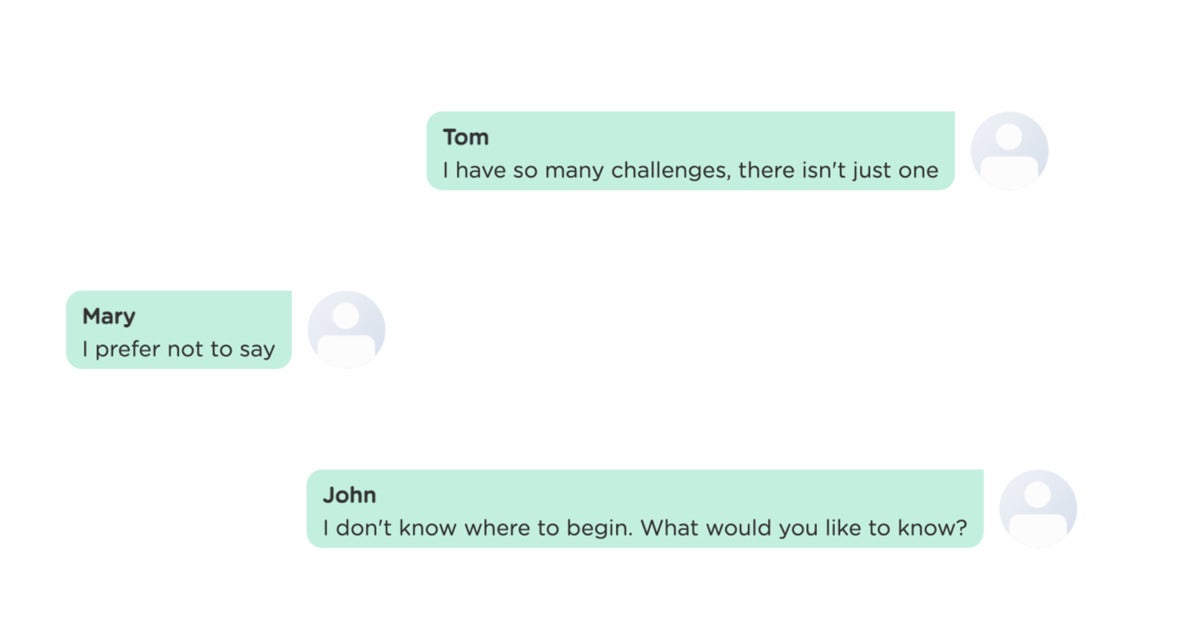

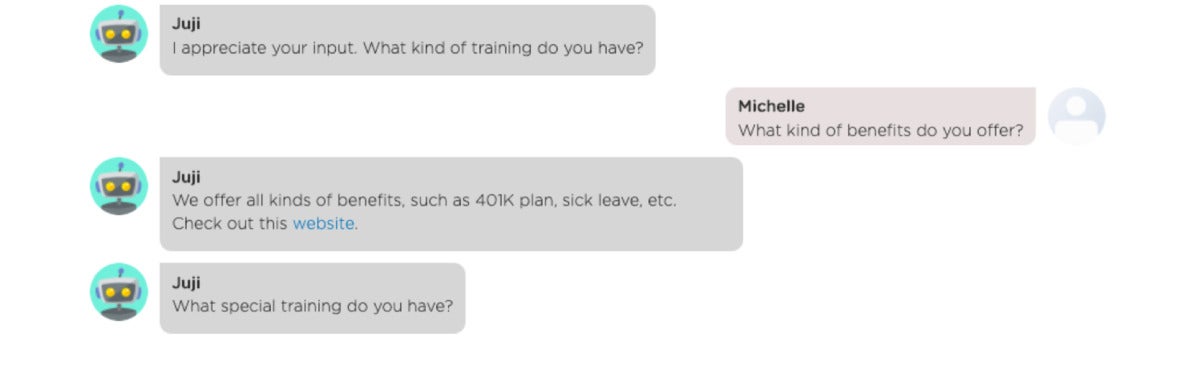

Second, candidates may “digress” from a conversation by asking a clarifying question or providing irrelevant responses. The examples below (Figure 2) show candidates’ digressive responses to the same question above. The AI assistant must recognize and handle such responses properly in order to continue the conversation.

Figure 2. Three different user digressions that the AI assistant must recognize and handle properly to continue the conversation prompted by the question, “What’s the top challenge you are facing at work?”

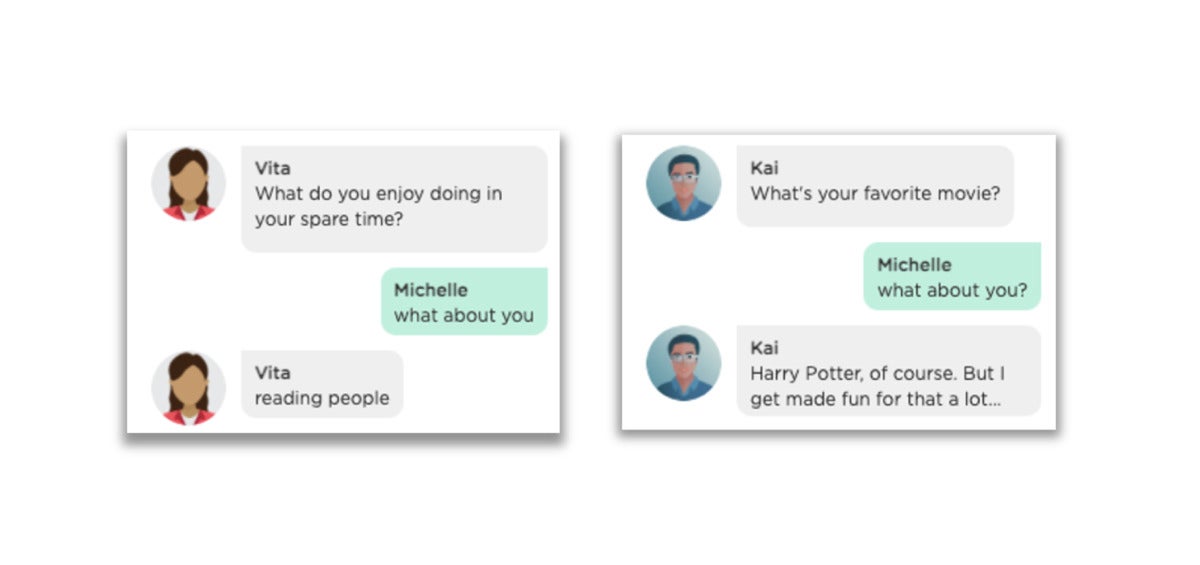

Third, human expressions may be ambiguous or incomplete (Figure 3).

Figure 3. An example showing a user’s ambiguous response to the AI’s question.

AI must learn human soft skills

What makes teaching machines human skills harder is that AI also needs to learn human soft skills in order to become a capable assistant to humans. Just like a good human assistant with soft skills, an AI must be able to read people’s emotions and be empathetic in sensitive situations.

In general, teaching AI human skills—language skills and soft skills alike—is difficult for three reasons. First, it often requires AI expertise and IT programming skills to figure out what methods or algorithms are needed and how to implement such methods to train an AI.

For example, in order to train an AI to properly respond to the highly diverse and complex user responses to an open-ended question, as shown in Figure 1 and Figure 2, one must know what natural language understanding (NLU) technologies (e.g., data-driven neural approaches vs. symbolic NLU) or machine learning methods (e.g., supervised or unsupervised learning) could be used. Moreover, one must write code to collect data, use the data to train various NLU models, and connect different trained models. As explained in this research paper by Ziang Xiao et al., the whole process is quite complex and requires both AI expertise and programming skills. This is true even when using off-the-shelf machine learning methods.

Second, in order to train AI models, one must have sufficient training data. Using the above example, Xiao et al. collected tens of thousands of user responses for each open-ended question to train an AI assistant to use such questions in an interview conversation.

Third, training an AI assistant from scratch is often an iterative and time-consuming process, as described by Grudin and Jacques in this study. This process includes collecting data, cleaning and annotating data, training models, and testing trained models. If the trained models do not perform well, the whole process is then repeated until the trained models are acceptable.

However, most organizations do not have in-house AI expertise or a sophisticated IT team, not to mention the large amounts of data required to train an AI assistant. This makes adopting AI solutions very difficult for such organizations, creating a potential AI divide.

Multi-level reusable, model-based, cognitive AI

To democratize AI adoption, one solution is to pre-train AI models that can be either directly reused or quickly customized to suit different applications. Instead of building a model completely from scratch, it would be much easier and quicker if we could piece it together from pre-built parts, similar to how we assemble cars from the engine, the wheels, the brakes, and other components.

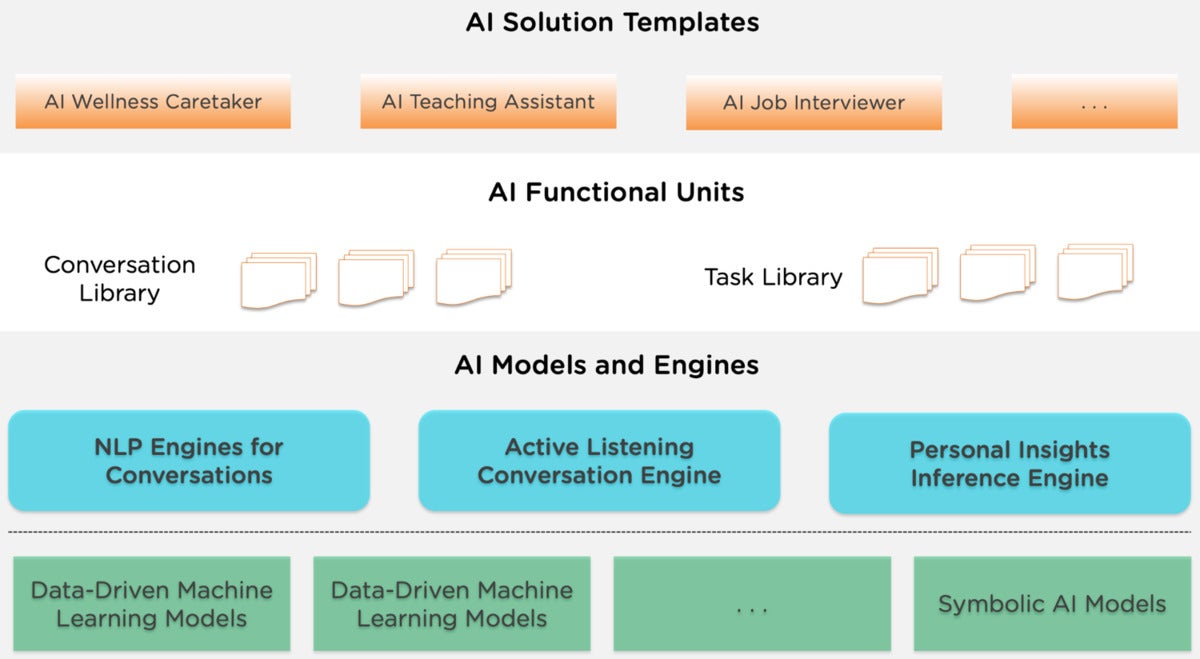

In the context of building an AI assistant, Figure 4 shows a model-based, cognitive AI architecture with three layers of AI components built one upon another. As described below, the AI components at each layer can be pre-trained or pre-built, then reused or easily customized to support different AI applications.

Figure 4. A model-based cognitive AI architecture with reusable AI at multiple levels.

Reuse of pre-trained AI models and engines (base of AI assistants)

Any AI system, including an AI assistant, is built on AI/machine learning models. Depending on the purposes of the models or how they are trained, they fall into two broad categories: (1) general purpose AI models that can be used across different AI applications and (2) special purpose AI models or engines that are trained to power specific AI applications. Conversational agents are an example of general purpose AI, while physical robots are an example of special purpose AI.

AI or machine learning models include both data-driven neural (deep) learning models and symbolic models. For example, BERT and GPT-3 are general purpose, data-driven models, typically pre-trained on large amounts of public data like Wikipedia. They can be reused across AI applications to process natural language expressions. In contrast, symbolic AI models such as finite state machines can be used as syntactic parsers to identify and extract more precise information fragments, e.g., specific concepts (entities) like a date or name from a user input.
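To make the symbolic side concrete, here is a minimal sketch of entity extraction with a regular expression, which is itself a compact way to specify a finite state machine. The pattern, the function name `extract_dates`, and the ISO-style date format are assumptions chosen for illustration; they are not taken from any particular AI assistant.

```python
import re
from typing import List

# Hypothetical pattern for illustration: ISO-style dates such as 2021-03-15.
# A regular expression of this kind describes a finite state machine.
DATE_PATTERN = re.compile(r"\b\d{4}-\d{2}-\d{2}\b")


def extract_dates(user_input: str) -> List[str]:
    """Return every ISO-style date string found in the user input."""
    return [match.group(0) for match in DATE_PATTERN.finditer(user_input)]


print(extract_dates("I can start on 2021-03-15 or 2021-04-01."))
```

Unlike a neural language model, a rule of this kind either matches or does not, which is why symbolic models suit precise extraction but cover only the formats someone has written down.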

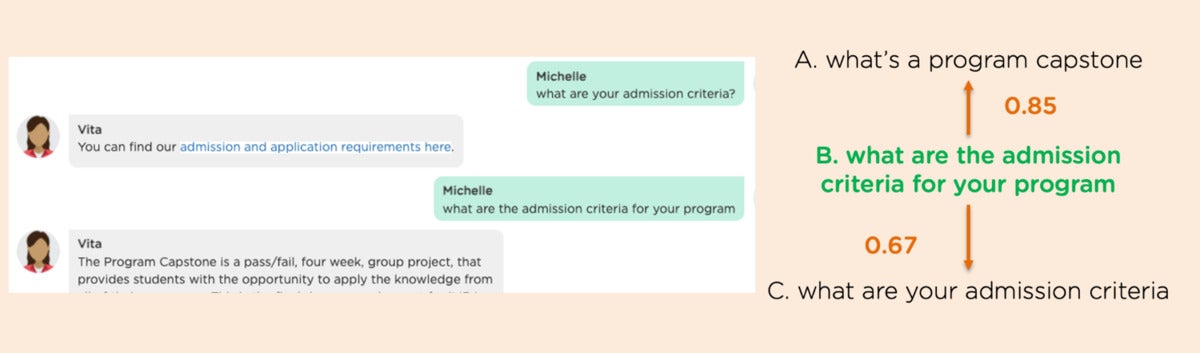

General purpose AI models often are inadequate to power specific AI applications for a couple of reasons. First, since such models are trained on general data, they may be unable to interpret domain-specific information. As shown in Figure 5, a pre-trained general AI language model might “think” expression B is more similar to expression A, whereas a human would recognize that B is actually more similar to expression C.

Figure 5. An example showing the misses of pre-trained language models. In this case, language models pre-trained on general data interpret expression B as being more similar to expression A, while it should be interpreted as more similar to expression C.

Additionally, general purpose AI models themselves do not support specific tasks such as managing a conversation or inferring a user’s needs and wants from a conversation. Thus, special purpose AI models must be built to support specific applications.

Let’s use the creation of a cognitive AI assistant in the form of a chatbot as an example. Built on top of general purpose AI models, a cognitive AI assistant is powered by three additional cognitive AI engines to ensure effective and efficient interactions with its users. In particular, the active listening conversation engine enables an AI assistant to correctly interpret a user’s input including incomplete and ambiguous expressions in context (Figure 6a). It also enables an AI assistant to handle arbitrary user interruptions and maintain the conversation context for task completion (Figure 6b).

While the conversation engine ensures a fruitful interaction, the personal insights inference engine enables a deeper understanding of each user and a more deeply personalized engagement. An AI assistant that serves as a personal learning companion, or a personal wellness assistant, can encourage its users to stay on their learning or treatment course based on their unique personality traits—what makes them tick (Figure 7).

Furthermore, conversation-specific language engines can help AI assistants better interpret user expressions during a conversation. For example, a sentiment analysis engine can automatically detect the expressed sentiment in a user input, while a question detection engine can identify whether a user input is a question or a request that warrants a response from an AI assistant.
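As a rough illustration of what a question detection engine decides, the sketch below uses two hand-written rules. This is an assumption made for brevity: a real engine would more likely be a trained model, and the word list and the name `is_question` are hypothetical.

```python
# Hypothetical list of words that often open a question or a request.
QUESTION_OPENERS = {
    "what", "why", "how", "when", "where", "who", "which",
    "can", "could", "would", "should", "do", "does", "is", "are",
}


def is_question(user_input: str) -> bool:
    """Guess whether the input is a question or request needing a reply."""
    text = user_input.strip().lower()
    if not text:
        return False
    if text.endswith("?"):
        return True
    # No question mark: fall back on the first word of the input.
    return text.split()[0] in QUESTION_OPENERS


print(is_question("can you repeat that"))
print(is_question("My workload is too heavy."))
```

Rules like these miss many real inputs, such as indirect questions, which is part of why pre-trained engines that can be reused are attractive.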

Figure 6a. Examples showing how a cognitive AI conversation engine handles the same user input in context with different responses.

Figure 6b. An example showing how a cognitive AI conversation engine handles user interruption in a conversation and is able to maintain the context and the chat flow.

Building any of the AI models or engines described here requires tremendous skill and effort. Therefore, it is highly desirable to make such models and engines reusable. With careful design and implementation, all of the cognitive AI engines we’ve discussed can be made reusable. For example, the active listening conversation engine can be pre-trained with conversation data to detect diverse conversation contexts (e.g., a user is giving an excuse or asking a clarification question). And this engine can be pre-built with an optimization logic that always tries to balance user experience and task completion when handling user interruptions.

Similarly, by combining Item Response Theory (IRT) with big data analytics, the personal insights engine can be pre-trained on individuals’ data that manifest the relationships between their communication patterns and their unique characteristics (e.g., social behavior or real-world work performance). The engine can then be reused to infer personal insights in any conversation, as long as the conversation is conducted in natural language.
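The way a conversation engine keeps its place when a user interrupts can be sketched in a few lines. The class below is a simplified illustration, not the design of any actual engine: it assumes a fixed list of questions, treats any input ending in a question mark as a digression, and uses hypothetical names throughout.

```python
from typing import List, Optional


class Conversation:
    """Toy conversation state that survives user digressions."""

    def __init__(self, questions: List[str]) -> None:
        self.pending = list(questions)      # questions not yet asked
        self.current: Optional[str] = None  # question awaiting an answer

    def next_prompt(self) -> Optional[str]:
        """Return the open question, asking a new one if none is open."""
        if self.current is None and self.pending:
            self.current = self.pending.pop(0)
        return self.current

    def handle(self, user_input: str) -> str:
        """Respond to the user while keeping the conversation context."""
        if user_input.strip().endswith("?"):
            # Digression: acknowledge it, then return to the open question.
            return f"Good question. Back to my question: {self.current}"
        # Otherwise treat the input as an answer and move on.
        self.current = None
        following = self.next_prompt()
        return following if following else "Thank you, that is all."


chat = Conversation(["What is your top challenge at work?", "How do you cope?"])
print(chat.next_prompt())
print(chat.handle("Why do you ask?"))
print(chat.handle("My workload is too heavy."))
```

The point of the sketch is that the open question is stored as state, so an interruption changes the reply but not the position in the task flow.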

Reuse of pre-built AI functional units (functions of AI assistants)

While general AI models and specific AI engines can provide an AI assistant with the base intelligence, a complete AI solution needs to accomplish specific tasks or render specific services. For example, when an AI interviewer converses with a user on a specific topic like the one shown in Figure 1, its goal is to elicit relevant information from the user on the topic and use the gathered information to assess the user’s fitness for a job role.

Thus, various AI functional units are needed to support specific tasks or services. In the context of a cognitive AI assistant, one type of service is to interact with users and serve their needs (e.g., finishing a transaction). For example, we can build topic-specific AI communication units, each of which enables an AI assistant to engage with users on a specific topic. As a result, a conversation library will include a number of AI communication units, each of which supports a specific task.

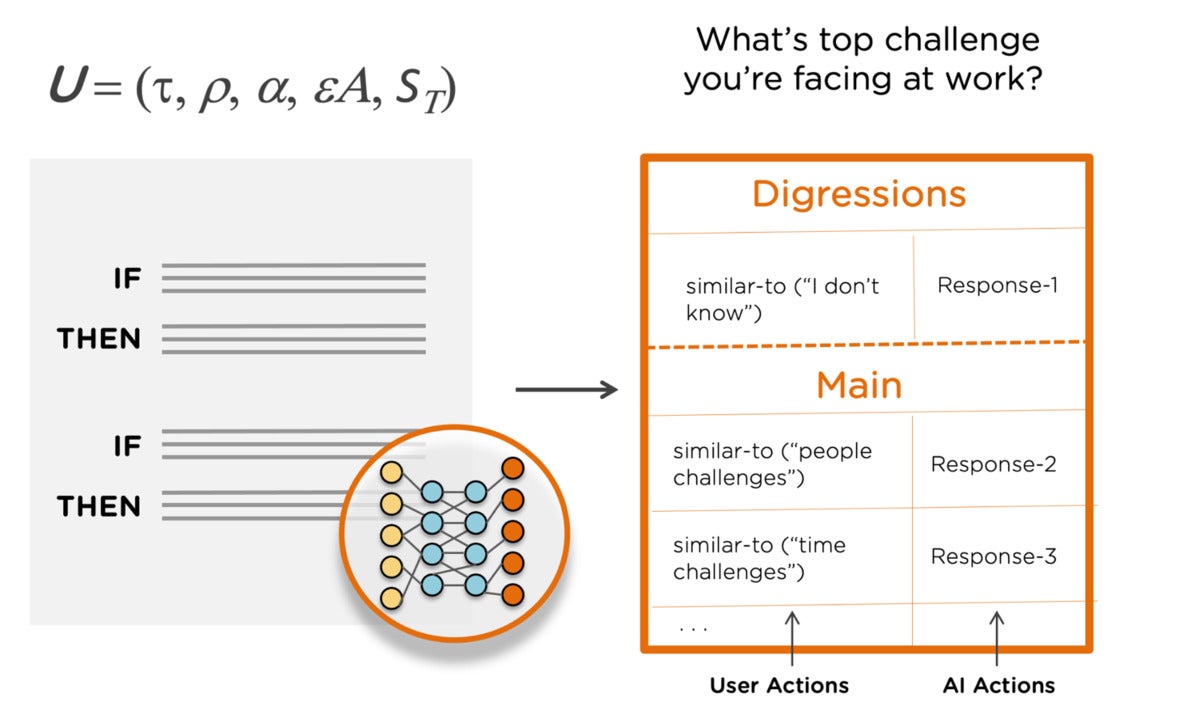

Figure 7 shows an example AI communication unit that enables an AI assistant to converse with a user such as a job applicant on a specific topic.

Figure 7. An example AI communication unit (U), which enables an AI assistant to discuss a specific topic with its users. It includes multiple conditional actions (responses) that an AI assistant can take based on a user’s actions during the discussion. Here user actions can be detected and AI actions can be generated using pre-trained language models such as the ones mentioned at the bottom two layers of the architecture.

In a model-based architecture, AI functional units can be pre-trained to be reused directly. They can also be composed or extended by incorporating new conditions and corresponding actions.
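One way to picture a communication unit is as a topic plus an ordered list of condition and action pairs. The sketch below is a hypothetical data structure, not an actual product API; the conditions are simple string checks standing in for the pre-trained models that would detect user actions in practice.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple

Condition = Callable[[str], bool]


@dataclass
class CommunicationUnit:
    """A topic with conditional actions (hypothetical structure)."""
    topic: str
    default_action: str
    rules: List[Tuple[Condition, str]] = field(default_factory=list)

    def extend(self, condition: Condition, action: str) -> "CommunicationUnit":
        """Return a new unit with one more condition and action."""
        return CommunicationUnit(
            self.topic, self.default_action, self.rules + [(condition, action)]
        )

    def respond(self, user_input: str) -> str:
        """Take the action of the first rule whose condition holds."""
        for condition, action in self.rules:
            if condition(user_input):
                return action
        return self.default_action


base = CommunicationUnit(
    topic="work challenge",
    default_action="Thanks for sharing.",
    rules=[(lambda s: s.strip().endswith("?"), "Let me clarify the question.")],
)
custom = base.extend(
    lambda s: "don't know" in s.lower(),
    "Take your time; any small example helps.",
)
print(custom.respond("I don't know"))
```

Because `extend` returns a new unit rather than modifying the original, the pre-built unit stays reusable as-is while each application adds its own conditions.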

Reuse of pre-built AI solutions (whole AI assistants)

The top layer of a model-based cognitive AI architecture is a set of end-to-end AI solution templates. In the context of making cognitive AI assistants, this top layer consists of various AI assistant templates. These templates pre-define specific task flows to be performed by an AI assistant along with a pertinent knowledge base that supports AI functions during an interaction. For example, an AI job interviewer template includes a set of interview questions that an AI assistant will discuss with a candidate as well as a knowledge base for answering job-related FAQs. Similarly, an AI personal wellness caretaker template may outline a set of tasks that the AI assistant needs to perform, such as checking the user’s health status and delivering care instructions or reminders.
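A template of this kind can be thought of as data: a task flow plus a knowledge base. The sketch below assumes a very simple form of both, with an ordered list of questions and a keyword-indexed FAQ. The class name, fields, and placeholder answers are all hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class AssistantTemplate:
    """An AI assistant template: task flow plus FAQ knowledge base."""
    name: str
    task_flow: List[str]   # questions or tasks, in order
    faq: Dict[str, str]    # keyword -> answer (placeholder content)

    def answer_faq(self, user_input: str) -> Optional[str]:
        """Return the first FAQ answer whose keyword appears in the input."""
        text = user_input.lower()
        for keyword, answer in self.faq.items():
            if keyword in text:
                return answer
        return None


interviewer = AssistantTemplate(
    name="job interviewer",
    task_flow=["What's the biggest challenge you are facing at work?"],
    faq={
        "salary": "Placeholder answer about salary.",
        "remote": "Placeholder answer about remote work.",
    },
)
print(interviewer.answer_faq("What is the salary range?"))
```

Customizing the template for a new role would then mean editing the question list and FAQ entries rather than retraining any model.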